Artificial intelligence (AI) is a concept regularly greeted with trepidation. On a surface level, many of us are wary of the growing power of our own machines – and the mainstream media has too often perpetuated these fears. Eye-catching headlines and wild projections about AI have had us nervously pondering our futures.

Those working in business technology have slowly learned to cut through the hyperbole. Industrial AI use cases are being integrated across the enterprise, bringing with them transformative benefits to productivity, efficiency and innovation. Organisations have never been more eager to shout about their work in AI.

As a single entity, however, AI is near impossible to define. After all, the term ‘artificial intelligence’ has been in our lexicon for more than 60 years. Over that time, it has acted as a label for countless experiments and solutions around cognitive machinery. Many of these fell by the wayside during ‘AI winters’, when funding shrank and priorities changed.

Today’s enterprise leaders are the lucky ones. The technologies themselves are finally reaching maturity, defining identities of their own under the catch-all umbrella of AI. One such subfield is ‘deep learning’, which takes the idea of human-like machines to a scarcely believable level.

DEEP LEARNING, DEFINED

Deep learning is itself a subset of machine learning, the strand of AI which is now driving huge advances for many businesses. Machine learning algorithms work within a set framework of features, giving the system the opportunity to learn from experience and therefore perform tasks more effectively. Deep learning algorithms are structured differently, closely reflecting the neural layout of the human brain.

‘Neural networks’ have been studied since the middle of the 20th century but have come to prominence in enterprise with the proliferation of data and rapid growth of computing power. The multi-layered nature of these networks allows structured, unstructured or inter-connected data sets to be utilised for the system’s gain. Deep learning requires enormous amounts of data; as a rule, the more information available to a model, the better it will perform.

McKinsey & Company’s 2018 white paper on the significant economic potential of advanced AI identified the following three variations of the neural network:

Feed forward neural networks: The simplest type of neural network. In this architecture, information moves in only one direction, forward, from the input layer, through the “hidden” layers, to the output layer. There are no loops in the network. The first single-neuron network was proposed as early as 1958 by AI pioneer Frank Rosenblatt. While the idea is not new, advances in computing power, training algorithms and the available data have led to higher levels of performance than previously possible.

Recurrent neural networks (RNNs): Artificial neural networks whose connections between neurons include loops, well-suited for processing sequences of inputs. In November 2016, Oxford University researchers reported that a system based on recurrent neural networks (and convolutional neural networks) had achieved 95% accuracy in reading lips, outperforming experienced human lip readers, who tested at 52% accuracy.

Convolutional neural networks (CNNs): Artificial neural networks in which the connections between neural layers are inspired by the organisation of the animal visual cortex, the portion of the brain that processes images, well suited for perceptual tasks.
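To make the three architectures above more concrete, here is a minimal sketch in plain Python. It is an illustration only, not code from the McKinsey paper: the function names (`dense`, `feed_forward`, `recurrent`, `convolve2d`), the choice of `tanh` as the activation and the weight layout are all assumptions made for the example, and there is no training step. The aim is simply to show how information flows in each case: straight through, around a loop, or via a small window sliding over an image.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron, then tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def feed_forward(inputs, layers):
    """Feed forward: data passes through each layer once, with no loops."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations


def recurrent(sequence, w_in, w_state, bias):
    """Recurrent: the hidden state from one step is fed back into the next."""
    state = [0.0] * len(bias)
    for x in sequence:
        # The comprehension reads the previous state before it is replaced.
        state = [
            math.tanh(
                sum(w * xi for w, xi in zip(w_in[j], x))
                + sum(w * s for w, s in zip(w_state[j], state))
                + bias[j]
            )
            for j in range(len(bias))
        ]
    return state


def convolve2d(image, kernel):
    """Convolutional: slide a small kernel over the image (no padding, stride 1).

    As in most deep learning libraries, the kernel is not flipped, so strictly
    speaking this is a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]
```

In a real system the weights would be learned from data rather than written by hand, and a GPU-accelerated framework would replace these nested loops. The structural difference between the three approaches, however, is the same.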

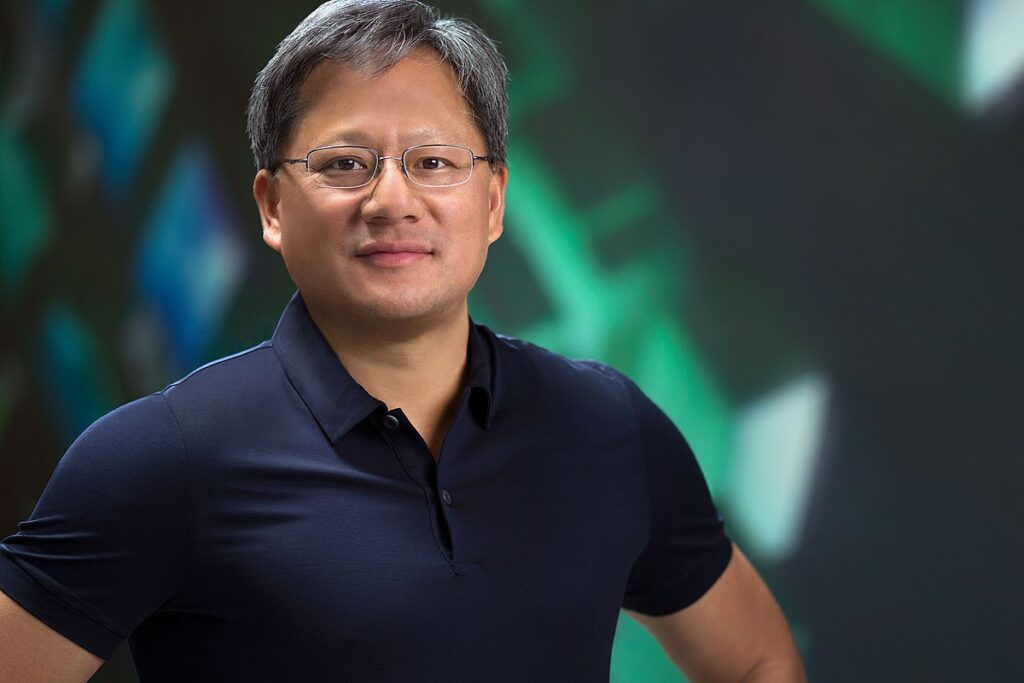

MAKING THE CASE

Deep learning use cases are now springing up at startup level, within global organisations and everywhere in between. Leaders across industries now have little choice but to embrace technology, and their biggest returns often come with investment in AI-based solutions.

As previously outlined, deep learning techniques are proving revolutionary in image recognition, speech recognition and natural language processing. Conversational interfaces are proving valuable tools for businesses as well as consumers, with giants like Facebook and Google putting voice at the core of development strategies. The likes of Amazon’s Alexa will only grow smarter and more life-like as the software learns from input.

Real-world examples aren’t restricted to this area, however, as deep learning models match with an ever-more diverse range of data sets. NVIDIA, the US-based technology company with a strong focus on bringing deep learning to the enterprise, has worked on a range of engagements with companies from different sectors.

In the oil & gas industry, for example, Eni and NVIDIA – through its partnership with HPE – have built reverse time migration algorithms to create an accurate image of the Earth’s subsurface, enabling Eni to search for hydrocarbons. In the healthcare sector, Insilico Medicine has leveraged NVIDIA’s GPU accelerators in its DeepPharma platform. DeepPharma utilises deep learning for drug repurposing and discovery in cancer and age-related diseases. In cybersecurity, Accenture Labs is able to detect threats by analysing anomalies in large-scale network graphs using GPUs and GPU-accelerated libraries.

Other areas in line for further disruption because of AI, according to McKinsey, include retail, sales and marketing, supply chain management and banking. Beyond these, a key strength of deep learning technology is the role it can play in refining predictive maintenance techniques by detecting anomalies in data, and companies operating in automotive assembly and the wider manufacturing market stand to benefit.
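The idea of spotting anomalies in equipment data can be illustrated with a deliberately simple sketch. Note that this is a basic statistical stand-in (a z-score check against a baseline), not a deep learning model; a production system would more likely learn what "normal" looks like from large volumes of sensor data. The function name `find_anomalies`, the threshold of three standard deviations and the assumption that the first readings reflect healthy operation are all hypothetical choices for the example.

```python
from statistics import mean, stdev


def find_anomalies(readings, baseline_size, threshold=3.0):
    """Return (index, value) pairs that deviate strongly from the baseline.

    Assumption: the first `baseline_size` readings represent normal operation.
    """
    baseline = readings[:baseline_size]
    mu = mean(baseline)
    sigma = stdev(baseline)
    anomalies = []
    for i, value in enumerate(readings[baseline_size:], start=baseline_size):
        # Flag readings more than `threshold` standard deviations from the mean.
        if sigma > 0 and abs(value - mu) / sigma > threshold:
            anomalies.append((i, value))
    return anomalies
```

Fed a series of, say, vibration or temperature readings, the function would flag the sudden spike that might precede a failure. Deep learning approaches aim to catch far subtler patterns than a fixed threshold can.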

NVIDIA’s co-founder and CEO Jensen Huang has long been an advocate of deep learning methods. Back in 2017, he committed to training 100,000 developers on deep learning and, in October last year, the company launched its RAPIDS open-source platform for large-scale data analytics and machine learning.

“AI is going to infuse all of software,” said Huang. “AI is going to eat software and it’s going to be in every aspect of software. Every single software developer has to learn deep learning.”

CHALLENGES OF ADOPTION

Huang’s ambition might be admirable, but he will have encountered a predictable issue on his quest for deep learning ubiquity: a skills gap. Quite simply, the data and AI markets are accelerating at a rate with which employers and educators cannot keep up. This problem is amplified when it comes to deep learning, where the technology is even more complex.

Aside from this familiar challenge and other normal hindrances to integration such as legacy structures, lack of roadmapping and deployment costs, deep learning adopters face a particular set of hurdles. A recent article by Forbes cited three key challenges alongside skills: incoherent strategic approaches, the interpretability of the data and a shortage of data.

In fact, it is the size and complexity of the data sets that present the most barriers to deep learning success. McKinsey’s white paper expands further by pinpointing these data-specific issues:

The challenge of labelling training data: This often must be done manually and is necessary for supervised learning. Promising new techniques are emerging to address this challenge, such as reinforcement learning and in-stream supervision, in which data can be labelled in the course of natural usage.

The difficulty of explaining in human terms results from large and complex models: Why was a certain decision reached? Product certifications in healthcare and in the automotive and aerospace industries, for example, can be an obstacle; among other constraints, regulators often want rules and choice criteria to be clearly explainable.

The risk of bias in data and algorithms: This issue touches on concerns that are more social in nature and which could require broader steps to resolve, such as understanding how the processes used to collect training data can influence the behaviour of models they are used to train. For example, unintended biases can be introduced when training data is not representative of the larger population to which an AI model is applied. Thus, facial recognition models trained on a population of faces corresponding to the demographics of AI developers could struggle when applied to populations with more diverse characteristics. A recent report on the malicious use of AI highlights a range of security threats, from sophisticated automation of hacking to hyper-personalised political disinformation campaigns.

THE FUTURE OF DEEP LEARNING

It is clear that certain deep learning roadblocks are slowing down ambitious companies, which is why simpler machine learning algorithms are more widespread in enterprise today. Conversely, the potential of deep learning models is greater – and the technology stands to be a critical component in one particular sector currently going through drastic future-proofing: the automotive industry.

Mobility as we know it now will soon be unrecognisable as clean energy production becomes mandatory and manufacturers edge nearer to perfecting the connected, self-driving vehicle. Many consumers remain sceptical about the true viability of autonomous transport, however, raising obvious concerns around safety, vehicle intelligence and security. Deep learning could provide the answers.

As machines operating in a natural environment, self-driving vehicles rely on deep learning. Breakthrough autonomous technology uses tailored algorithms to recognise the likes of road signs or pedestrians. The unlikely may seem close to reality as high-profile companies like Tesla and Waymo test their self-driving platforms under the public’s glare – but truthfully, where are we on the autonomous vehicle timeline?

“Even with an appropriate set of guiding principles, there are going to be a lot of perceptual challenges,” wrote robotics pioneer Rodney Brooks. “They are way beyond those that current developers have solved with deep learning networks. Human driving will probably disappear in the lifetimes of many people reading this. But it is not going to happen in the blink of an eye.”

Even with assistance from systems such as Lidar, roadways dominated by autonomous cars, vans and trucks are some way off. The example is the current state of deep learning models in microcosm; while the structure of layered machine learning algorithms and their purpose are firmly established, the scale of their potential remains hard to project.

The most recent analysis from MarketWatch paints a positive picture, however, predicting that the deep learning market will grow ‘extensively’ between 2019 and 2025, with a CAGR (Compound Annual Growth Rate) of 31.2%.
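To put that growth rate in perspective, a compound rate can be converted into an overall multiple with a line of arithmetic. The sketch below assumes 2019 is the base year and that growth compounds annually over six periods to 2025; the source does not state the market's size in either year, so none is assumed here. The function names are illustrative.

```python
def growth_multiple(cagr, years):
    """Overall growth factor after compounding `cagr` annually for `years` years."""
    return (1 + cagr) ** years


def implied_cagr(start_value, end_value, years):
    """Inverse calculation: the annual rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


# Assumption: six compounding periods between 2019 and 2025.
multiple = growth_multiple(0.312, 6)
print(f"A 31.2% CAGR over six years multiplies the market by about {multiple:.1f}x")
```

On those assumptions, a market growing at 31.2% a year would be roughly five times its 2019 size by 2025.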

What is certain is that the value of data to every organisation is only going to increase. With that, so will the research and investment into, and honing of, AI-based technologies. Man and machine will be collaborating on a very deep level indeed.