It takes just over 10 milliseconds from the moment you see something for your brain to process exactly what you’re looking at.

Car. Scissors. Clock. Monkey. Pen. Your brain takes an instant snapshot and instantaneously processes whatever it is you’ve locked eyes on. It’s an awesome natural operation, and for the last few years the technology to replicate that process in your smartphone has been expanding.

As culture becomes more and more dominated by images, the appetite for visual search has grown. There is a growing belief that the future of search will be images rather than keywords.

Google is, of course, at the forefront with its Lens app that allows your phone to work as a visual search engine. Take a photo of a restaurant and it will show you reviews and tell you whether it delivers and whether your friends have been there. It’s all part of Google’s plan to turn your smartphone camera into a “visual browser for the world around you” and it appears to be working – research by Moz in 2017 found that image searches make up almost 30% of all searches on Google.

Pinterest Lens is also making waves, allowing you to discover everything from recipes to new clothing styles just by taking photos on your smartphone. Earlier this year Pinterest reported that more than 600 million visual searches are carried out every month across Lens. Those are some pretty serious numbers.

Elsewhere, ASOS launched a visual search tool last year that takes a customer’s uploaded image of, say, a jumper and goes through its enormous inventory to find matches. It is therefore unsurprising that retail is the sector where visual search is having the greatest impact.

This is where Slyce comes in. The market leader in visual search and image recognition, Slyce is embedded in around 35 major retailers’ apps and has roughly 50 retailers who have licensed one or more of its APIs to power image recognition. It already works with more retailers than anyone else in the visual search industry, including Tommy Hilfiger, Urban Outfitters, Macy’s, Abercrombie & Fitch and JCPenney, and it is now expanding into Europe – aggressively.

CEO Ted Mann moved to Slyce in 2016 when it acquired SnipSnap – the coupon recognition company he founded. It immediately became clear that retailers were licking their lips at the prospect of integrating visual search and snap-to-buy technology (go to a retailer’s app, launch the camera, take a picture of something, and you’re taken to a product page where you can buy it or you can find it in the store).

“One of the first retailers that we signed was [American department store] Neiman Marcus,” says Mann. “They called their snap-to-buy feature Snap-Find-Shop – that’s how they marketed it. They did it in their app and did it as well on their mobile website and it enabled people when they see a fashion item they really like – a handbag or a pair of shoes on the street and say ‘I wanna have those’ – to take a picture of it, find it, buy it.”

While for many retailers the consumer’s starting point for snap-to-buy is uploading a screenshot, the technology can be used in many different ways. Different strokes for different companies.

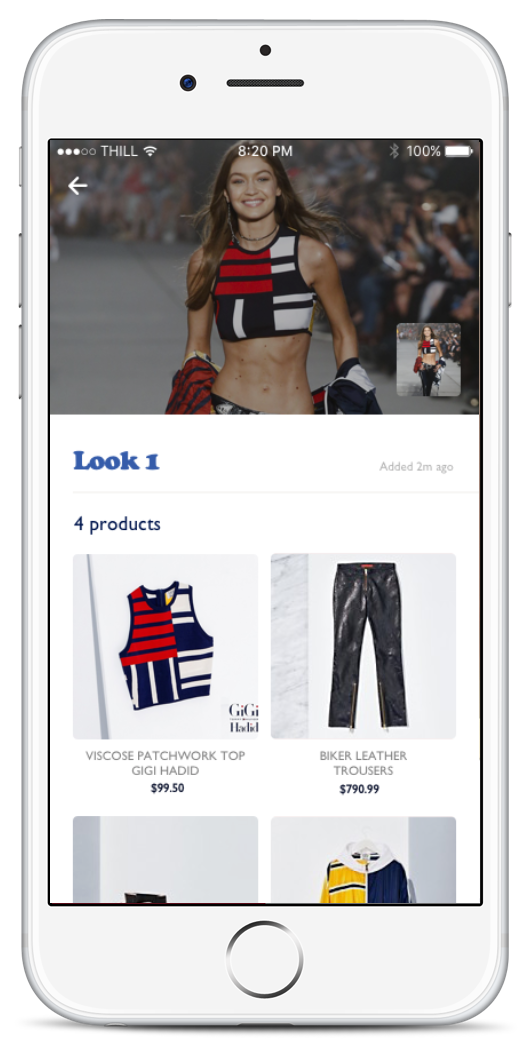

“Tommy Hilfiger came to us with a very ambitious – and at the time I thought a little bit of a risky – use case,” says Mann. “They wanted to enable consumers to be able to take a picture of a model at one of their fashion shows and be able to identify and shop the ‘look’ of that model.

“The asset we launched with them, which was called TOMMYNOW SNAP, would allow you to take a picture of Gigi Hadid, their celebrity spokesperson at the time, or upload a screenshot from a video of her and identify the look that she was wearing on the runway.

“It worked phenomenally well and continues to work to this day because when they launch a new fashion line one of the biggest challenges they have is showing people they can buy the clothes right off their runway. Consumers used to have to wait six months for the clothing to get to the store, but now they have this whole new go-to-market strategy where it’s ‘see now, buy now’.

“This app created a totally frictionless way to do that and it’s done phenomenally well. They’ve been absolutely crushing it on this new strategy and the brand has been revived in part because of it, and we’re a piece of that by activating the promotion.”

From fashion to something more homely: Slyce works with America’s biggest arts and crafts chain, Michaels. Michaels wanted to use the technology not only to identify products but also to suggest projects you can use the product for. For example, the customer takes a picture of a power tool. The technology identifies what brand and type of power tool it is, but also shows you, say, five examples of projects that can be built using it.

Whatever the branch of retail, the two key questions for Slyce when working with brands are always: “What would you do with this tech?” and “What sort of problems would you solve?” The answers are always varied.

“Some retailers we work with, especially in the grocery space, gravitate to list building,” says Mann. “The idea of being able to build a shopping list is very compelling. And we even work with publications. We’re actually working right now with a publication in the UK which is going to be live soon. They were very excited about identifying the ‘look’ kind of experience, identifying all the different items and pieces of clothing that this particular model was wearing.

“It’s up to the brand to tell us where this can really add value. We have some brands that frankly don’t even see that much value in embedding this technology in a consumer facing app, but instead they use it for their own internal merchandising teams.”

Mann believes there’s tremendous potential for visual search in B2B. “We have retailers that use visual search in their merchandising team to help their buyers to make better decisions about which product to buy and which product to carry next season,” he says. “We also have industrial partners that are using visual search to be able to manage their inventory and things like that.

“There are plenty of B2B applications. I think Slyce is still a fairly early-stage startup and we have about 60 employees. I think as we grow we may decide to have our own standalone B2B division. Truth be told, we do consider ourselves to be a B2B Software-as-a-Service company. It just so happens that most of the retailers we’re working with are doing it in a consumer-facing application.”

The growth potential is enormous, and the pace is likely to pick up rapidly. The Samsung S9 came equipped with a visual search mode in the camera. Samsung called it the Shopping Mode, and it sat alongside a portrait mode and a panorama mode. When visual searches are being integrated at that level, you know it’s not going away.

“I think the big exciting thing for me is that the technology works like it didn’t work that way five years ago,” says Mann. “I think it’s a little bit like voice search. If you think where voice search was a year ago, I think visual search is actually getting faster adoption and the technology is improving at a faster rate than voice search.

“So everybody is seeing a million Amazon Alexa devices or Echo devices, and I think you’re going to see a similar ubiquity for visual search, whether it’s on the mobile devices themselves, or connected cameras, all over people’s houses and elsewhere.”

Whereas some have described visual search technology as disruptive, Mann doesn’t particularly believe that to be the case. Instead, he sees it as supplementary.

“Occasionally you talk about technologies that are replacing something else,” he says. “I don’t think of visual search as replacing text search, I think it’s just enabling a new kind of search with pictures.

“I think that’s very exciting. It’s a catalyst, an eye-opening kind of thing for all the opportunities it’s going to create.”